R Read Parquet

The simplest way to work with Parquet files in R is the arrow package, which is available on CRAN. Parquet is a columnar storage file format, efficient and widely used; arrow's read_parquet() reads a Parquet file into R, and write_parquet() writes one from R. The same package also reads and writes Arrow files (formerly known as Feather). The usage of the reader begins read_parquet(file, col_select = NULL, as_data_frame = TRUE, ...).
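A minimal round trip, assuming the arrow package has been installed from CRAN. It writes R's built-in mtcars data frame to a temporary file (the file name is arbitrary) and reads it back with the default arguments:

```r
library(arrow)

# Write a built-in data frame to a temporary Parquet file
path <- tempfile(fileext = ".parquet")
write_parquet(mtcars, path)

# Read it back; with the default as_data_frame = TRUE the result is a data frame
cars_df <- read_parquet(path)
str(cars_df)
```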

Forum questions on this topic range from "I'm completely new to R, but I've been able to read Parquet files in our storage account" to "I realise Parquet is a column format, but with large files, sometimes you don't want ..." Because Parquet stores data column by column, read_parquet() does not have to load a whole file: col_select keeps only the columns you name, and as_data_frame = FALSE returns an Arrow Table instead of an R data frame. Other helpers build on the same reader; for example, a parquet_from_url() function documents its result as a data frame as parsed by arrow::read_parquet(), and its examples start with try({ parquet_from_url(...
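A short sketch of both arguments, again assuming arrow is installed and using a temporary copy of mtcars as the "large" file:

```r
library(arrow)

path <- tempfile(fileext = ".parquet")
write_parquet(mtcars, path)

# Keep only two columns; the remaining columns are not read into R
two_cols <- read_parquet(path, col_select = c("mpg", "cyl"))
print(names(two_cols))

# Return an Arrow Table instead of converting to an R data frame
tbl <- read_parquet(path, as_data_frame = FALSE)
print(tbl$num_rows)
```

Keeping the data as an Arrow Table defers the conversion to R until you actually need a data frame.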

If your data lives in Spark, sparklyr's spark_read_parquet() reads a Parquet file into a Spark DataFrame. Its usage begins spark_read_parquet(sc, name = NULL, path = name, options = list(), repartition = ...). You can read data from HDFS (hdfs://), S3 (s3a://), as well as the local file system (file://). The columns argument accepts a vector of column names or a named vector of column types; if specified, the elements can be "binary" for BinaryType, "boolean" for BooleanType, "byte" for ByteType, "integer" for IntegerType, and so on.
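A hedged sketch, assuming sparklyr and a local Spark installation (for example one set up with spark_install()) plus arrow for creating a sample file. The table name "cars" is arbitrary, and the file:// prefix assumes a Unix-style temporary path:

```r
library(arrow)
library(sparklyr)

# Create a sample Parquet file on the local file system
path <- tempfile(fileext = ".parquet")
write_parquet(mtcars, path)

# Connect to a local Spark instance (assumes Spark is already installed)
sc <- spark_connect(master = "local")

# Read the file into a Spark DataFrame registered under the name "cars"
cars_sdf <- spark_read_parquet(sc, name = "cars", path = paste0("file://", path))

# Count rows on the Spark side before disconnecting
n_rows <- sdf_nrow(cars_sdf)
print(n_rows)

spark_disconnect(sc)
```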

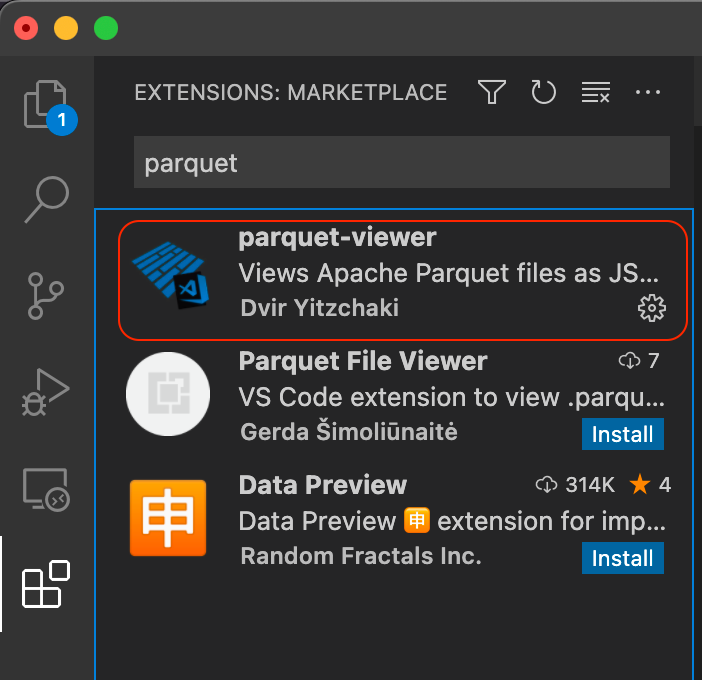

How to View Parquet File on Windows Machine How to Read Parquet File

Read_parquet( file, col_select = null, as_data_frame = true,. Usage spark_read_parquet( sc, name = null, path = name, options = list(), repartition =. Web this function enables you to read parquet files into r. R/parquet.r parquet is a columnar storage file format. Web read a parquet file into a spark dataframe.

PySpark read parquet Learn the use of READ PARQUET in PySpark

This function enables you to read parquet. Web 1 answer sorted by: ' parquet ' is a columnar storage file format. Web read and write parquet files ( read_parquet () , write_parquet () ), an efficient and widely used columnar format read and write. Web this function enables you to read parquet files into r.

PySpark Tutorial 9 PySpark Read Parquet File PySpark with Python

Many answers found online use sparklyr or other Spark packages, which actually require ... On Databricks, one reported problem is that Databricks Runtime 5.5 LTS comes with sparklyr 1.0.0 (released 2019 ...).


One related snippet loads arrow and creates an output directory for converted files if it does not already exist; its trailing comment is cut off:

```r
library(arrow)

converted_parquet <- "converted_parquet"  # hypothetical directory name; not defined in the snippet

# Create the output directory only if it is missing
if (!dir.exists(converted_parquet)) {
  dir.create(converted_parquet)
  ## this doesn't yet ...
}
```
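The snippet above stops after creating the directory. A sketch of how such a directory might be filled and read back, assuming the goal is to split an in-memory data frame into several Parquet files; the directory location, the split by cyl, and the file names are all illustrative:

```r
library(arrow)

# Hypothetical output directory under the session's temporary directory
out_dir <- file.path(tempdir(), "converted_parquet")
if (!dir.exists(out_dir)) dir.create(out_dir)

# Split a data frame into groups and write each group to its own Parquet file
pieces <- split(mtcars, mtcars$cyl)
for (group in names(pieces)) {
  write_parquet(pieces[[group]], file.path(out_dir, paste0("cyl_", group, ".parquet")))
}

# Read every Parquet file in the directory and stack the results
files <- list.files(out_dir, pattern = "\\.parquet$", full.names = TRUE)
combined <- do.call(rbind, lapply(files, read_parquet))
print(nrow(combined))
```

For larger collections of files, arrow's write_dataset() and open_dataset() handle partitioned directories without a manual loop.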